Rethinking Deloitte’s HR Reimagined for Agentic AI with Real-World Guardrails

- Maryna Khomich

- Sep 3, 2025

I’ve spent about 15 years in HR and recruiting across Europe: from HR Director at Viber in Belarus to running my own agency in the Netherlands and supporting high-tech scale-ups through restructurings, hybrid work arrangements, and rapid hiring. I’m practical by nature; I care about what helps teams today and protects trust tomorrow. Reading Deloitte’s “HR Reimagined: Agentic AI for HR 2025” made me rethink its vision through the lens of real-world guardrails: what actually works in fast-moving organizations, and what breaks when people, context, and data quality are ignored.

Below is a short summary of the report, followed by four of its “spicy” ideas. For each one, I cover what the report claims, what can actually happen in practice, the warning signs to watch, and the concrete steps I’d take as an HR expert to make it work safely.

Part I — The report in a nutshell

Why reimagine HR

HR can’t stay a paperwork function. We need to design work so that machines handle repeatable tasks and people focus on strategy, judgment, and relationships.

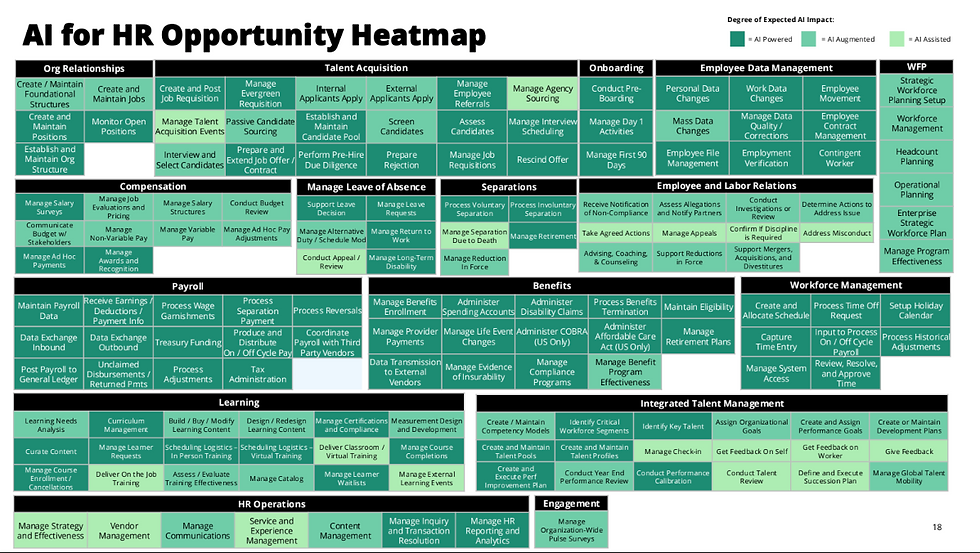

How AI reshapes HR tasks

AI-assisted: humans lead, AI supports (strategy, advising execs).

AI-augmented: handoffs between humans and AI (sourcing, knowledge upkeep, investigations).

AI-powered: AI leads, people supervise (Q&A, routine transactions, reporting, content).

What “agentic AI” means

Not just a chatbot. Agents can understand context, plan steps, call tools/APIs, talk to other agents and people, and learn from feedback. Several agents can team up to finish complex workflows end-to-end (think onboarding across HR/IT/benefits/learning).

Where value shows up

Fewer errors and faster cycles, more personalized help for employees and managers, better decisions from patterns and predictions, and clear audit trails.

How to find good use cases

Start with a business outcome (e.g., lower attrition in critical roles). Map the decisions, the knowledge/data needed, and the actions/systems involved. Prioritize high-volume, rule-based flows where outcomes are measurable.

Future HR roles (not fewer — different)

HRBPs: from “fire-fighting” to proactive, insight-driven advising with more consistent quality.

COEs: from one-off policy updates and surveys to continuous, data-driven product management of the people experience.

HR Operations: machines handle most admin; humans focus on exceptions, empathy, and improvement.

Concrete cases

Return from leave: the agent sees return dates, plans tasks, nudges the manager, updates systems, and learns from feedback.

Turnover risk: the agent flags risk, suggests budget-aware options, executes the chosen action, and learns from outcomes.

Key idea

Agentic AI is a work-design shift. Success depends on how well we shape the handoffs between people and machines — with trust and governance baked in.

Part II — Four bold claims, reality checks, warning signs, and what to do

1) Claim: AI can deliver a consistently great HR Business Partner experience

What the report says

Today, great HRBP work is patchy and depends on the individual. With AI, every HRBP can access the same quality insights and playbooks, so the baseline gets stronger and more consistent.

What can really happen (expectation vs. reality)

Consistency may improve, but context is messy. Teams have history. Power dynamics are real. Culture varies by leader. A “good answer in general” can be a “bad move here and now.”

Warning signs

Nuance lives with humans. A live person reads the room — who actually makes decisions, what the last reorg felt like, which words land badly with this VP. That context isn’t always in systems, so a “correct” answer can still damage trust.

Garbage in, garbage out. Many companies have fragmented data: duplicates, outdated records, parallel spreadsheets, custom fields, and old processes. If the inputs are shaky, the AI can confidently produce… the wrong next step, at scale.

What to do (practical steps)

Set boundaries. Allow AI for policies, market data, and options; require human sign-off for reorganizations, investigations, exec conflicts, and sanctions.

Force context. Before AI suggests anything, the workflow asks the HRBP for a short “situation brief” (stakeholders, history, sensitivities). No brief — no guidance.

Run in shadow mode first. Let the agent propose answers for 6–8 weeks without sending them to the business. Compare its answers with what your best HRBPs actually did, then tune.

Show your work. Every AI suggestion includes its sources and a confidence level. The HRBP logs why they accept, adjust, or reject it. Over time, the system learns your nuance.
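For teams building this, here is a minimal sketch of how the boundaries, the “no brief, no guidance” rule, and the decision log could fit together. The category names, the three brief fields, and the return labels are my own illustrative assumptions, not anything from the report or a specific HR platform; unknown categories deliberately fall back to a person.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical request categories, mirroring the boundaries described above.
HUMAN_SIGNOFF = {"reorganization", "investigation", "exec_conflict", "sanction"}
AI_ALLOWED = {"policy", "market_data", "options"}


@dataclass
class SituationBrief:
    stakeholders: str
    history: str
    sensitivities: str

    def is_complete(self) -> bool:
        # Every field must contain more than whitespace.
        return all(s.strip() for s in (self.stakeholders, self.history, self.sensitivities))


def route_request(category: str, brief: Optional[SituationBrief]) -> str:
    """Decide how a hypothetical HRBP assistant may respond to a request."""
    if brief is None or not brief.is_complete():
        return "ask_for_brief"            # No brief, no guidance.
    if category in HUMAN_SIGNOFF:
        return "draft_for_human_signoff"  # AI drafts; an HRBP approves or stops it.
    if category in AI_ALLOWED:
        return "ai_guidance"
    return "human_only"                   # Unknown categories default to a person.


@dataclass
class DecisionLog:
    entries: List[dict] = field(default_factory=list)

    def record(self, suggestion_id: str, action: str, reason: str) -> None:
        # Capture why the HRBP accepted, adjusted, or rejected a suggestion.
        if action not in {"accept", "adjust", "reject"}:
            raise ValueError(f"unknown action: {action}")
        self.entries.append({"id": suggestion_id, "action": action, "reason": reason})
```

The logged reasons are what you would review during the shadow period to see where the agent and your best HRBPs disagree.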

2) Claim: 80%+ of HR operations can be automated

What the report says

AI handles most admin and transactional work; humans focus on exceptions, empathy-heavy moments, and continuous improvement.

What can really happen (expectation vs. reality)

You’ll automate a lot — but the “last 20%” is the hardest and takes time. Sensitive cases (medical leave, payroll errors, separations) still need people. And budgets, legacy contracts, and tech debt slow big changes.

Warning signs

The hard cases eat your day. Even if 80% is automated, the remaining cases are complex and emotional. They can consume more attention than you expect.

Sunk costs are real. You may be mid-cycle on HR systems. Replacing everything at once can miss ROI targets and drain change capacity.

What to do (practical steps)

Design tiers. Tier 0: guided self-serve. Tier 1: AI concierge. Tier 2: human expert. Pre-route life events and grievances to humans with clear SLAs.

Right to a human — always. Visible “Talk to a person now,” warm handoffs, and the transcript travels with the case, so employees don’t repeat themselves.

Wrap, don’t rip. Add an orchestration layer on top of legacy tools; replace back-ends only at natural renewal points.

Automate by slice. Tackle small flows with a payback period under 12 months (name changes, verifications). Count avoided FTE growth, error reductions, and cycle-time wins, not just headcount.

Watch unit costs. Track cost per transaction; use autoscaling and caching to keep AI spend sensible.
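To make the tiers and the payback rule concrete, here is a rough sketch under stated assumptions: the topic labels, the `wants_human` flag standing in for the “Talk to a person now” button, and the savings figure are all hypothetical. It routes sensitive topics straight to a human, carries the transcript along on handoff, and screens candidate flows against the 12-month payback bar.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical topic labels; a real setup would use its own case taxonomy.
PRE_ROUTE_TO_HUMAN = {"life_event", "grievance", "medical_leave", "separation", "payroll_error"}
SELF_SERVE_TOPICS = {"name_change", "employment_verification", "policy_lookup"}


@dataclass
class Case:
    employee_id: str
    topic: str
    transcript: List[str] = field(default_factory=list)
    wants_human: bool = False  # set by a visible "Talk to a person now" button


def assign_tier(case: Case) -> int:
    """Return 0 (guided self-serve), 1 (AI concierge) or 2 (human expert)."""
    if case.wants_human or case.topic in PRE_ROUTE_TO_HUMAN:
        return 2
    if case.topic in SELF_SERVE_TOPICS:
        return 0
    return 1


def warm_handoff(case: Case) -> dict:
    """Package the case for the human queue, transcript included."""
    return {
        "employee_id": case.employee_id,
        "topic": case.topic,
        "tier": 2,
        "history": list(case.transcript),  # the employee doesn't repeat themselves
    }


def payback_months(one_off_cost: float, monthly_savings: float) -> float:
    """Months to recover the build cost from monthly savings (avoided hires, fewer errors)."""
    if monthly_savings <= 0:
        return float("inf")
    return one_off_cost / monthly_savings


def fits_a_slice(one_off_cost: float, monthly_savings: float) -> bool:
    # The "automate by slice" bar: payback in under 12 months.
    return payback_months(one_off_cost, monthly_savings) < 12
```

For example, a flow costing 60,000 to build that saves 10,000 a month pays back in 6 months and qualifies; one costing 240,000 with the same savings does not.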

3) Claim: Real-time AI nudges can replace surveys and focus groups

What the report says

Move from occasional surveys to ongoing listening and gentle nudges that help people in the flow of work (e.g., reminders, tips, tailored help).

What can really happen (expectation vs. reality)

If it feels like surveillance, people will push back — especially in the EU. And if the nudge is tone-deaf, they’ll mute it. Fast.

Warning signs

Privacy/ethics backlash. Passive data use can feel creepy. Works councils and legal teams will question purpose, consent, and data flow.

Signal noise. Sarcasm, cultural styles, and unusual behavior can fool models. Irrelevant or clumsy nudges erode trust faster than a bad survey.

What to do (practical steps)

Do privacy right up front. Run Data Protection Impact Assessments (DPIAs), minimize data, aggregate where possible, and allow easy opt-outs. Be crystal-clear, in plain language, about what you use and why.

Include employees in governance. Set up an ethics board with employee representatives, legal, and DEI; run quarterly audits and publish brief transparency notes.

Start low-stakes. Pilot nudges on training and knowledge discovery before well-being or performance.

Test and triangulate. Use control groups. Mix passive signals with short, consented micro-polls. Localize tone; review content quarterly.
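Control groups, opt-outs, and aggregation can be combined in a few lines. This is a minimal sketch, assuming each employee has a stable ID and that the outcome is something low-stakes like training completion; the experiment name and the minimum group size of 10 are illustrative assumptions to agree with legal and the works council. Hashing the ID makes assignment repeatable without storing a list, and opted-out people are excluded from both the nudges and the analysis.

```python
import hashlib
from statistics import mean
from typing import Dict, List, Optional, Set

MIN_GROUP_SIZE = 10  # Assumed minimum group size before results are reported.


def assign_arm(employee_id: str, experiment: str, opted_out: Set[str],
               control_share: float = 0.5) -> Optional[str]:
    """Stable assignment to 'control' or 'nudge'; opted-out employees are left out entirely."""
    if employee_id in opted_out:
        return None
    digest = hashlib.sha256(f"{experiment}:{employee_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 2**32  # roughly uniform in [0, 1)
    return "control" if bucket < control_share else "nudge"


def compare_arms(outcomes: Dict[str, List[float]]) -> Optional[float]:
    """Mean outcome of the nudge group minus control; None if either group is too small."""
    nudge = outcomes.get("nudge", [])
    control = outcomes.get("control", [])
    if min(len(nudge), len(control)) < MIN_GROUP_SIZE:
        return None
    return mean(nudge) - mean(control)
```

Returning nothing for small groups is the “aggregate where possible” principle in code: no result is better than one that points at a handful of identifiable people.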

4) Claim: AI can beat managers at spotting and fixing turnover risk

What the report says

The agent flags risk early (tenure, market, pay), suggests budget-fit options, executes the action, and learns from the result.

What can really happen (expectation vs. reality)

Early alerts help — but if managers treat them as “the system’s job,” accountability drops. And if the playbook is too generic, you miss what actually matters to that person.

Warning signs

Leaders stop really knowing people. If AI is the lookout, managers may rely on the feed instead of daily conversations. Culture suffers.

One-size fixes. Cash or a standard recognition badge won’t fix a growth bottleneck, a team conflict, or a caregiving need.

What to do (practical steps)

Make responsiveness visible. Put “responded to risk alerts” and “took stay actions” on manager scorecards. Review monthly at the exec table.

Require a human check-in. Within 10 business days of an alert, the manager does a 1:1 and records context the model can’t see.

Personalize the menu. Blend risk drivers with stated preferences (skills, mobility, learning). Allow manager overrides with a short note; feed outcomes back to improve the model.

Offer levers beyond cash. Lateral moves, stretch work, mentorship, protected focus time, and schedule flexibility. Encode these so they’re easy to pick and track.

Run fairness checks. Watch who gets what interventions across demographics and levels; avoid over-relying on budget fixes.
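Two of these steps are easy to automate for the scorecard: the 10-business-day check-in window and the fairness view of who gets which intervention. The sketch below is hypothetical; it counts Monday to Friday only and ignores public holidays, which a real calendar would need to handle per country.

```python
from collections import Counter, defaultdict
from datetime import date, timedelta
from typing import Dict, Iterable, Optional, Tuple


def add_business_days(start: date, days: int) -> date:
    """Step forward `days` weekdays (Mon-Fri) from `start`; public holidays are ignored."""
    current, added = start, 0
    while added < days:
        current += timedelta(days=1)
        if current.weekday() < 5:
            added += 1
    return current


def check_in_status(alert_day: date, check_in_day: Optional[date],
                    today: date, window: int = 10) -> str:
    """Scorecard status for the manager 1:1 that must follow a risk alert."""
    deadline = add_business_days(alert_day, window)
    if check_in_day is not None:
        return "on_time" if check_in_day <= deadline else "late"
    return "overdue" if today > deadline else "open"


def intervention_mix(records: Iterable[Tuple[str, str]]) -> Dict[str, Dict[str, float]]:
    """Share of each intervention type per group, from (group, intervention) pairs."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for group, intervention in records:
        counts[group][intervention] += 1
    return {g: {i: n / sum(c.values()) for i, n in c.items()} for g, c in counts.items()}
```

For instance, an alert raised on Monday, September 1, 2025 gives the manager until Monday, September 15. If one level gets cash in every case while another gets mentorship and stretch work, the mix makes that visible at the monthly exec review.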

Part III — Non-negotiable guardrails for any agentic AI program

Human in the loop for every high-impact call. If a decision affects jobs, pay, privacy, or legal risk, a person stays in charge. AI can draft and suggest, but a human reviews, approves, or stops it. This keeps accountability clear and protects trust.

Simple “model cards” and clear controls. For every AI use, keep a one-pager: what it’s for, what data it uses, known risks, and how we monitor it. Test the system against tough scenarios and keep a rollback plan ready. Update this card as the model or policy changes.

Live health checks on the system. Track basics in real time: accuracy, speed, how often it hands off to a person, satisfaction, and complaints. If numbers drift, pause the feature with a kill switch, fix prompts or data, then relaunch. Review trends regularly, not once a year.

Change management that people can feel. Get visible executive backing, train managers, and give everyone a help hub with short demos, FAQs, and a place to leave feedback. Communicate in plain language and ship small improvements quickly so people see progress.

Data quality with teeth. Agree on a few simple data health metrics (freshness, completeness, reconciliation). If data drops below the bar, watermark the output or switch the agent off until it’s fixed. Tie vendor SLAs and team OKRs to these numbers so quality is everyone’s job.
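The live health checks and the data-quality bar can feed one simple decision: run, watermark, or pause. Here is a minimal sketch, assuming you already measure freshness, completeness, and reconciliation, plus accuracy against a baseline; every threshold below is an illustrative placeholder, not a recommendation, and each team would agree its own.

```python
from dataclasses import dataclass


@dataclass
class DataHealth:
    freshness_days: float  # age of the most recent sync, in days
    completeness: float    # share of required fields populated, 0..1
    reconciliation: float  # share of records matching the system of record, 0..1


# Illustrative thresholds only; agree the real bar with data owners and vendors.
WARN = DataHealth(freshness_days=2, completeness=0.95, reconciliation=0.98)
STOP = DataHealth(freshness_days=7, completeness=0.85, reconciliation=0.90)


def breaches(h: DataHealth, bar: DataHealth) -> bool:
    return (h.freshness_days > bar.freshness_days
            or h.completeness < bar.completeness
            or h.reconciliation < bar.reconciliation)


def agent_mode(h: DataHealth, accuracy: float, baseline_accuracy: float,
               max_drop: float = 0.05) -> str:
    """Return 'pause', 'watermark' or 'run' for a hypothetical agent."""
    if breaches(h, STOP) or baseline_accuracy - accuracy > max_drop:
        return "pause"      # kill switch: fix prompts or data, then relaunch
    if breaches(h, WARN):
        return "watermark"  # output still shown, but flagged as based on degraded data
    return "run"
```

Keeping two bars (warn and stop) means small data problems degrade the service visibly instead of switching it off, while serious drift still triggers the kill switch.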

Bottom line

Agentic AI can raise the baseline, speed up service, and surface better decisions, if we design the seams with care. Think less about “more automation” and more about clear boundaries, honest privacy, human judgment where it matters, and constant learning loops. That’s how you get real value without breaking trust.
